By Olenka Van Schendel

In 2018, the enterprise IT silo problem persists. The disconnect between Digital initiatives and Legacy development continues to drain IT budgets and to increase the risk of side-effects in production. Errors detected in production have a direct business impact: the average cost of a major incident affecting a strategic software application in production is $1 million per hour, ten times the average hourly cost of a hardware malfunction (*). An estimated 70% of production errors are caused by deployment errors, and only 30% by faulty code. Yet those responsible for today’s diverse IT cultures lack visibility and control over the software release process.

What solutions are emerging? Since the last Gartner Symposium, we have seen Release Management technologies and DevOps converge. Enterprise DevOps is coming of age.

As DevOps becomes a mainstream movement, its community is taking on the operational responsibility that comes with success. The agility of “Dev” now has to meet the constraints and corporate policies familiar to “Ops”.

Summary:

- From CI/CD to Enterprise DevOps

- What has been holding DevOps back? Bimodal IT holds the key

- The limits of CI/CD

- From Application Release Automation (ARA) to Orchestration (ARO)

- Enterprise DevOps: Scaling Release Quality and Velocity

- How to transition Legacy systems to DevOps?

- Leveraging existing CI/CD pipelines

- Modernizing your IT assets

From CI/CD to Enterprise DevOps

IT environments today comprise a complex mixture of applications, each potentially made up of hundreds of microservices, containers and multiple development technologies. They include legacy platforms that have proven so reliable and valuable to the business that, even in 2018, they still form the core of many of the world’s largest business applications.

Many CI/CD pipelines have done a fair job of provisioning, environment configuration and automating application deployment. But so far they have failed to give the business answers to enterprise-level challenges around compliance with new regulations, corporate governance and evolving security needs.

What are called DevOps pipelines today are often custom-scripted and fragile chains of disparate tools. Designed primarily for cloud-native environments, they have successfully automated a repeatable process for getting applications running, tested and delivered.

But most lack the technology layer needed to manage legacy platforms such as IBM i (aka iSeries, AS/400) and mainframe z/OS, leaving a “weak link” in the delivery process. This siloed approach to DevOps tooling exposes the business to production downtime and uncontrolled costs.

Solutions are emerging. Listen to SpareBank1’s experience for a recent example. The next phase in release management is already here. Enterprise DevOps offers a single, common software delivery pipeline across all IT development cultures, with end-to-end transparency on release status. This blog explains how we got here.

What has been holding DevOps back? Bimodal IT holds the key.

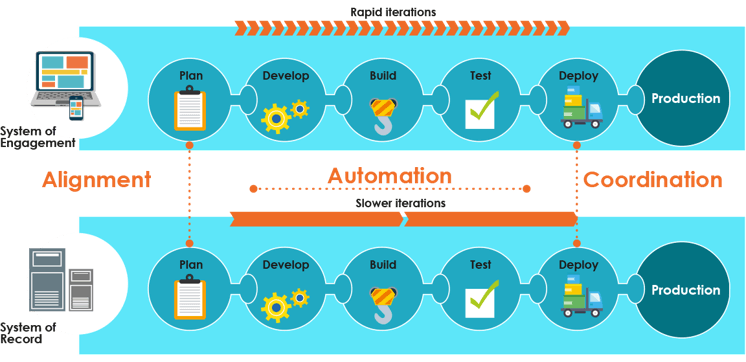

The last few years have seen the emergence of “Bimodal IT”, an IT management practice that recognizes two types (and speeds) of software development and prescribes separate but coordinated processes for each.

Gartner Research defines Bimodal IT as “the practice of managing two separate but coherent styles of work: one focused on predictability; the other on exploration”.

In practice, this calls for two parallel tracks, one supporting rapid application development for digital innovation projects, alongside another, slower track for ongoing application maintenance on core software assets.

According to Gartner, IT work styles fall into two modes. Bimodal Mode 1 is optimized for areas that are more predictable and well understood. It focuses on exploiting what is known, while renovating the legacy environment into a state fit for a digital world. Mode 2 is exploratory: it experiments to solve new problems and is optimized for areas of uncertainty. Mode 2 initiatives often begin with a hypothesis that is tested and adapted over short iterations, potentially using a minimum viable product (MVP) approach. Neither mode is static, and both are essential for an enterprise to create substantial value, drive significant organizational change and carry out its digital transformation. Combining the more predictable evolution of products and technologies (Mode 1) with the new and innovative (Mode 2) is the essence of an enterprise bimodal capability.

Legacy systems like IBM i and z/OS often fall into the Mode 1 category. New developments on Windows, Unix and Linux typically fall into Mode 2.

The limits of CI/CD

Seamless software delivery is a primary business goal. The IT industry has made great strides in this direction with the widespread adoption of automated Continuous Integration/Continuous Delivery (CI/CD). But let us be clear about what CI/CD is and what it is not.

Continuous Integration (CI) is a set of development practices that drives teams to implement small changes and check code in to shared repositories frequently. CI starts at the end of the code phase and requires developers to integrate code into the repository several times a day. Each check-in is then verified by an automated build and test, allowing teams to detect and correct problems early.
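The check-in loop described above can be sketched in a few lines of Python. This is a toy model, not how any particular CI server works: `CheckIn`, `build`, `unit_tests` and `verify_checkin` are hypothetical names, and the "build" and "test" steps are stand-ins that compile and exercise a snippet of code instead of calling a real build tool.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# A verification step takes the checked-in source and returns pass/fail.
Step = Tuple[str, Callable[[str], bool]]


@dataclass
class CheckIn:
    commit_id: str
    source: str  # simplified: the whole changed module as a string


def build(source: str) -> bool:
    """Toy 'build': the checked-in code must at least compile."""
    try:
        compile(source, "<checkin>", "exec")
        return True
    except SyntaxError:
        return False


def unit_tests(source: str) -> bool:
    """Toy 'test run': load the code and check one expected behaviour."""
    namespace: dict = {}
    exec(source, namespace)
    add = namespace.get("add")
    return callable(add) and add(2, 3) == 5


def verify_checkin(checkin: CheckIn, steps: List[Step]) -> Tuple[bool, str]:
    """Run the steps in order and stop at the first failure, so a problem
    is reported against one small change rather than a large merge."""
    for name, step in steps:
        if not step(checkin.source):
            return False, f"{checkin.commit_id}: failed at {name}"
    return True, f"{checkin.commit_id}: verified"


pipeline: List[Step] = [("build", build), ("unit-test", unit_tests)]
print(verify_checkin(CheckIn("a1", "def add(a, b):\n    return a + b\n"), pipeline))
# (True, 'a1: verified')
print(verify_checkin(CheckIn("b2", "def add(a, b):\n    return a - b\n"), pipeline))
# (False, 'b2: failed at unit-test')
print(verify_checkin(CheckIn("c3", "def add(a, b) return a\n"), pipeline))
# (False, 'c3: failed at build')
```

Stopping at the first failing step mirrors the point of CI: the sooner a small change is rejected, the cheaper the fix.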

Continuous Delivery (CD) picks up where CI ends and spans the provision-test-environment, deploy-to-test, acceptance-test and deploy-to-production phases of the SDLC.

Continuous Deployment extends Continuous Delivery: every change that passes the automated tests is deployed to production automatically. In DevOps thinking, continuous deployment should be the goal of most companies that are not constrained by regulatory or other requirements.
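The difference between Continuous Delivery and Continuous Deployment comes down to what happens before the deploy-to-production phase. The sketch below models the CD phases named above as an ordered list: in delivery mode the change waits for a human approval, in deployment mode it goes straight through. `run_release`, the `stage_ok` hook and `all_pass` are hypothetical names, and the stage checks are stubbed out.

```python
from typing import Callable, List, Optional

# The CD phases named above, in order. Code arriving here has already passed CI.
CD_STAGES = ["provision-test-environment", "deploy-to-test",
             "acceptance-test", "deploy-to-production"]


def run_release(change: str,
                stage_ok: Callable[[str, str], bool],
                continuous_deployment: bool,
                approved_by: Optional[str] = None) -> List[str]:
    """Walk one change through the CD stages and return a log of what happened.

    With continuous_deployment=False (continuous delivery), the change stops
    before production until a person approves it. With continuous_deployment=True,
    passing the automated stages is enough to reach production."""
    log: List[str] = []
    for stage in CD_STAGES:
        if stage == "deploy-to-production" and not continuous_deployment:
            if approved_by is None:
                log.append("awaiting manual approval")
                return log
            log.append(f"approved by {approved_by}")
        if not stage_ok(change, stage):
            log.append(f"stopped at {stage}")
            return log
        log.append(stage)
    return log


def all_pass(change: str, stage: str) -> bool:
    # Hypothetical hook: in practice this would provision, deploy or run tests.
    return True


print(run_release("rel-42", all_pass, continuous_deployment=True))
# ['provision-test-environment', 'deploy-to-test', 'acceptance-test', 'deploy-to-production']
print(run_release("rel-42", all_pass, continuous_deployment=False))
# ['provision-test-environment', 'deploy-to-test', 'acceptance-test', 'awaiting manual approval']
```

A regulated organization would typically keep `continuous_deployment=False` and record who approved each production release.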

The issue is that most CI/CD pipelines are limited to the cloud-native, so-called “new technology” side of the enterprise. Enterprises are now waiting for the next evolution: a common, shared pipeline across all technology cultures. To achieve this, many organizations need to progress from simple automation to business release coordination, or orchestration.

From Application Release Automation (ARA) to Orchestration (ARO)

Application Release Automation (ARA) involves packaging an application, update or release and deploying it from development, across various environments, and ultimately to production. ARA tools combine the capabilities of deployment automation, environment management and modeling.

Gartner predicts that by 2020, over 50% of global enterprises will have implemented at least one application release automation solution, up from less than 15% in 2017. The ARA solution market, approximately seven years old, reached an estimated $228.2 million in 2016, up 31.4% from $173.6 million in 2015, and is expected to keep growing at an estimated 20% compound annual growth rate (CAGR) through 2020.
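As a back-of-the-envelope reading of the figures above, compounding the 2016 estimate at the stated 20% CAGR for the four years to 2020 gives the market size those numbers imply. This is plain arithmetic on the quoted figures, assuming the CAGR applies from the 2016 base; it is not a forecast published by Gartner.

```latex
\[
M_{2020} \approx M_{2016}\,(1 + 0.20)^{4}
         = 228.2 \times 2.0736
         \approx 473 \ \text{(million US\$)}
\]
```

In other words, a 20% CAGR sustained over four years roughly doubles the market.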

The ARA market is evolving fast in response to growing enterprise requirements to both scale DevOps initiatives and improve release management agility across multiple cultures, processes and generations of technology. We are seeing ARA morph into a new discipline, Application Release Orchestration (ARO).

Sitting one layer above ARA, Application Release Orchestration (ARO) tools arrange and coordinate automated tasks into a consolidated release management workflow. They extend best practices by moving application-related artifacts, applications, configurations and even data together across the application life cycle. ARO spans software delivery across pipelines and provides visibility into the entire software release process.
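One way to picture ARO moving "applications, configurations and even data together" is a release that bundles components from several pipelines and is promoted only when all of them are ready. This is a minimal sketch under stated assumptions: `Component`, `Release` and the artifact names are hypothetical, and a real orchestration tool would do far more than this.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Component:
    name: str      # hypothetical artifact name
    pipeline: str  # the team pipeline that produced it
    kind: str      # "application", "configuration" or "data"
    ready: bool    # did that pipeline's own automated checks pass?


@dataclass
class Release:
    version: str
    components: List[Component]
    environment: str = "test"

    def blockers(self) -> List[str]:
        """Components whose pipelines have not yet signed off."""
        return [c.name for c in self.components if not c.ready]

    def promote(self, target: str) -> bool:
        """Move all components to `target` together, or none of them."""
        if self.blockers():
            return False
        self.environment = target
        return True


release = Release("2018.06", [
    Component("order-api", "cloud-pipeline", "application", ready=True),
    Component("ORDPGM", "ibmi-pipeline", "application", ready=False),
    Component("order-config", "cloud-pipeline", "configuration", ready=True),
    Component("order-data-fix", "ibmi-pipeline", "data", ready=True),
])
print(release.promote("production"), release.blockers(), release.environment)
# False ['ORDPGM'] test
```

The all-or-nothing `promote` is the design choice that matters: a cloud service never reaches production ahead of the legacy program it depends on.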

ARO forms the cornerstone of Enterprise DevOps.

Enterprise DevOps: Scaling Release Quality and Velocity

Enterprise DevOps is still new, and competing definitions are appearing. Think of it as DevOps at Scale.

In keeping with Bimodal IT, large enterprises use DevOps teams to build and deploy software through individual, parallel pipelines. Each pipeline flows continuously and iteratively from development to integration and deployment, and uses its own toolchain to automate or orchestrate the phases and sub-phases of the Enterprise DevOps SDLC.

At a high level, the phases of the Enterprise DevOps SDLC (ED-SDLC) can be summarized as: plan, analyze, design, code, commit, unit-test, integration-test, functional-test, deploy-to-test, acceptance-test, deploy-to-production, operate and user-feedback.

The phases and tasks of the ED-SDLC can differ from one pipeline to another, or each phase or sub-phase can receive a different level of emphasis. For example, in Bimodal Mode 1 on a system of record (SOR), the plan, analyze and design phases may be of greater importance than in Mode 2. In Mode 2 on a system of engagement (SOE), the emphasis may instead be on frequent commit, unit-test, integration-test and functional-test cycles.
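Pipelines can vary while still sharing one phase model, and that property can be checked. The sketch below, a hypothetical illustration rather than part of any product, treats the Enterprise DevOps SDLC phases listed above as the common order and tests whether a pipeline's own phase list respects it. The Mode 1 and Mode 2 pipeline definitions are invented examples.

```python
from typing import Iterable, List

# The ED-SDLC phases listed above, in their common order.
ED_SDLC: List[str] = [
    "plan", "analyze", "design", "code", "commit", "unit-test",
    "integration-test", "functional-test", "deploy-to-test",
    "acceptance-test", "deploy-to-production", "operate", "user-feedback",
]


def follows_common_order(pipeline_phases: Iterable[str]) -> bool:
    """True if every phase is a known ED-SDLC phase and the phases appear in
    the common relative order (the pipeline's phases are a subsequence)."""
    remaining = iter(ED_SDLC)
    # `phase in remaining` advances the iterator, so order is enforced.
    return all(phase in remaining for phase in pipeline_phases)


# Hypothetical pipelines: the Mode 1 SOR pipeline keeps every phase,
# the Mode 2 SOE pipeline folds analysis and design into short iterations.
mode1_sor = list(ED_SDLC)
mode2_soe = ["plan", "code", "commit", "unit-test", "integration-test",
             "functional-test", "deploy-to-test", "acceptance-test",
             "deploy-to-production", "operate", "user-feedback"]

print(follows_common_order(mode1_sor))  # True
print(follows_common_order(mode2_soe))  # True
print(follows_common_order(["deploy-to-production", "unit-test"]))  # False
```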

The risk of deployment errors is high in enterprise environments because the toolchains in each pipeline differ, and dependencies exist between artifacts in distinct pipelines. Orchestration is needed to coordinate processes across the pipelines. Orchestration amounts to a more sophisticated form of automation, with some built-in intelligence and the ultimate goal of being autonomic.
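To make the cross-pipeline dependency problem concrete, the sketch below orders a hypothetical release by its dependencies, so that an IBM i database change lands before the programs that use it, and those before the Linux service and cloud front end that call them. It is an illustration using Python's standard `graphlib` module (3.9+), not a description of how ARCAD or any other ARO product schedules deployments; the artifact names and dependencies are invented.

```python
from graphlib import TopologicalSorter  # Python 3.9+
from typing import Dict, List, Set

# Hypothetical release spanning three pipelines: which pipeline builds each artifact.
pipeline_of: Dict[str, str] = {
    "CUSTFILE": "ibmi",       # database file change
    "CUSTPGM": "ibmi",        # program that reads CUSTFILE
    "CUSTRPT": "ibmi",        # report that reads CUSTFILE
    "customer-api": "linux",  # service that calls CUSTPGM
    "web-portal": "cloud",    # front end that calls customer-api
}

# artifact -> artifacts that must be deployed before it (possibly in another pipeline)
depends_on: Dict[str, Set[str]] = {
    "CUSTPGM": {"CUSTFILE"},
    "CUSTRPT": {"CUSTFILE"},
    "customer-api": {"CUSTPGM"},
    "web-portal": {"customer-api"},
}


def deployment_waves(deps: Dict[str, Set[str]]) -> List[List[str]]:
    """Group artifacts into waves: each wave can be deployed in parallel once
    every earlier wave is done. Raises graphlib.CycleError on circular deps."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()
    waves: List[List[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves


for number, wave in enumerate(deployment_waves(depends_on), start=1):
    print(number, [f"{name} ({pipeline_of[name]})" for name in wave])
# 1 ['CUSTFILE (ibmi)']
# 2 ['CUSTPGM (ibmi)', 'CUSTRPT (ibmi)']
# 3 ['customer-api (linux)']
# 4 ['web-portal (cloud)']
```

No single pipeline sees this whole graph, which is why the ordering has to live in an orchestration layer above the individual toolchains.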

How to transition Legacy systems to DevOps?

In response to the challenges of Bimodal IT, we have reached a point where classic DevOps and Release Management disciplines converge.

For over 25 years Arcad Software has been helping large enterprises and SMEs improve software development through advanced tools and innovative new techniques. During this time, we have developed deep expertise in legacy IBM i and z/OS systems. Today we are recognized by Gartner Research as a significant player in the Enterprise DevOps and ARO space for both legacy and modern platforms.

Many ARO vendors assume greenfield development on Windows, Unix and Linux, so legacy systems become an afterthought. ARCAD is different: we understand the need to get the most from your company’s decades of investment in legacy systems, as well as the demands and challenges of unlocking the value within those applications. ARCAD lets you offer your application owners and stakeholders a practical, inclusive, step-by-step path to both DevOps and ARO for new and legacy applications, instead of an expensive and risky rip-and-replace project.

Leveraging existing CI/CD pipelines

A huge number of tools are available to organisations for delivering DevOps today. Tools overlap, and the danger is “toolchain sprawl”. Yet no single tool can address every need in a modern development environment, so it is essential that all selected tools integrate easily with each other.

The ARCAD for DevOps solution has an open design and integrates easily with standard tools such as Git, Jenkins, JIRA and ServiceNow. It can orchestrate the delivery of all enterprise application assets, from the most recent cloud-native technologies to the core legacy code that underpins your business.

ARCAD has a proven methodology to ensure we leverage the value in your Legacy applications and avoid a rip-and-replace approach. ARCAD solutions extend and scale your existing DevOps pipeline into a frictionless workflow that supports ALL the platforms in your business.

Modernizing your IT assets

If the future of your legacy application assets is a concern, complementary ARCAD solutions can automate the modernization of your legacy databases and code. This increases their flexibility in modern IT architectures, makes it easier to hire younger development talent, and helps new hires collaborate efficiently with veteran legacy team members.

With 25 years of Release Management experience working with the largest and most respected Legacy and Digital IT teams across the globe, ARCAD Software has built security, compliance and risk minimization into all of its offerings. This is exactly where DevOps is headed.

(*) Source: IDC

Olenka Van Schendel

WW Marketing Director, Arcad Software

With 28 years of IT experience in both distributed systems and IBM i, Olenka started out in the Artificial Intelligence domain and natural language processing, working as a software engineer developing principally on UNIX. She soon specialized in the development of integrated software tooling, including compilers, debuggers and source code management systems. As VP Business Development in the ARCAD Software group, she continues her focus on Application Lifecycle Management (ALM) and DevOps tooling with a multi-platform perspective including IBM i.
